Azure Data Factory: 7 Powerful Features You Must Know

Unlock the full potential of cloud data integration with Azure Data Factory—a powerful, serverless service that orchestrates and automates data movement and transformation at scale. Whether you’re building ETL pipelines or managing complex workflows, this guide dives deep into everything you need to know.

What Is Azure Data Factory?

Azure Data Factory (ADF) is Microsoft’s cloud-based data integration service that enables organizations to create data-driven workflows for orchestrating and automating data movement and data transformation. It allows you to ingest, prepare, transform, and publish data using a managed platform in the cloud.

Core Definition and Purpose

Azure Data Factory acts as a central hub for integrating data from disparate sources, both on-premises and in the cloud. Its primary purpose is to streamline ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) processes without requiring infrastructure management.

- Enables hybrid data integration across cloud and on-premises systems.

- Supports batch processing as well as event-driven, near-real-time pipeline runs.

- Integrates seamlessly with other Azure services like Azure Synapse Analytics, Azure Databricks, and Azure Blob Storage.

How Azure Data Factory Fits into Modern Data Architecture

In today’s data-driven world, organizations rely on data from multiple sources—databases, SaaS applications, IoT devices, and more. ADF plays a critical role in modern data architectures by acting as the orchestration layer that connects these systems.

“Azure Data Factory is not just a tool; it’s the backbone of scalable, cloud-native data pipelines.” — Microsoft Azure Documentation

It supports data lake architectures, data warehousing, and real-time analytics by enabling reliable, repeatable workflows that can be monitored and managed centrally.

Key Components of Azure Data Factory

To understand how Azure Data Factory works, it’s essential to explore its core components. Each component plays a specific role in building and managing data integration workflows.

Pipelines and Activities

A pipeline in Azure Data Factory is a logical grouping of activities that perform a specific task. Activities are the individual actions within a pipeline, such as copying data, transforming it using Databricks, or executing stored procedures.

- Copy Activity: Moves data from source to destination with built-in connectors.

- Transformation Activities: Run work on external compute, such as HDInsight jobs, Azure Functions, and Databricks jobs.

- Control Activities: Manage workflow logic (e.g., If Condition, ForEach, Execute Pipeline).
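ADF stores every pipeline as a JSON document, so the structure described above can be sketched in a few lines of Python. This is a minimal illustration, not a full definition: the pipeline, activity, and dataset names are hypothetical, and the source and sink types assume a delimited-text file in Blob Storage being copied into Azure SQL Database.

```python
import json


def dataset_ref(name):
    """Reference to a dataset that is defined elsewhere in the factory."""
    return {"referenceName": name, "type": "DatasetReference"}


def build_copy_pipeline(pipeline_name, source_dataset, sink_dataset):
    """Return a pipeline definition that contains a single Copy activity."""
    copy_activity = {
        "name": "CopyBlobToSql",  # hypothetical activity name
        "type": "Copy",
        "inputs": [dataset_ref(source_dataset)],
        "outputs": [dataset_ref(sink_dataset)],
        "typeProperties": {
            # Assumed store types: CSV in Blob Storage to Azure SQL Database.
            "source": {"type": "DelimitedTextSource"},
            "sink": {"type": "AzureSqlSink"},
        },
    }
    return {"name": pipeline_name, "properties": {"activities": [copy_activity]}}


# Hypothetical pipeline and dataset names, used only for illustration.
pipeline = build_copy_pipeline("DailySalesLoad", "SalesCsv", "SalesTable")
print(json.dumps(pipeline, indent=2))
```

The printed JSON has the same shape you see in the code view of the pipeline designer: a name, plus a list of activities that point at datasets by reference.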

Linked Services and Datasets

Linked services define the connection information needed to connect to external resources. They are like connection strings but with additional metadata such as authentication methods and endpoints.

- Examples include Azure SQL Database, Amazon S3, Salesforce, and FTP servers.

- Datasets represent structured data within data stores. They define the data structure and location.

- Together, linked services and datasets are used by activities to read and write data.
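To make the relationship concrete, here is a rough sketch of a linked service and a dataset that points at it, written as Python dictionaries that mirror the JSON ADF stores. All names, the container, and the file name are hypothetical, and the connection string is a placeholder rather than a real secret.

```python
import json

# Linked service: how to connect to the store (hypothetical name, placeholder secret).
blob_linked_service = {
    "name": "BlobStorageLS",
    "properties": {
        "type": "AzureBlobStorage",
        "typeProperties": {"connectionString": "<storage-connection-string>"},
    },
}

# Dataset: which data inside that store, and how it is shaped.
sales_csv_dataset = {
    "name": "SalesCsv",
    "properties": {
        "type": "DelimitedText",
        "linkedServiceName": {
            "referenceName": blob_linked_service["name"],
            "type": "LinkedServiceReference",
        },
        "typeProperties": {
            "location": {
                "type": "AzureBlobStorageLocation",
                "container": "raw",        # assumed container name
                "fileName": "sales.csv",   # assumed file name
            },
            "columnDelimiter": ",",
            "firstRowAsHeader": True,
        },
    },
}

print(json.dumps(sales_csv_dataset, indent=2))
```

The dataset never repeats the connection details. It only names the linked service, which is why one linked service can back many datasets.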

Integration Runtime (IR)

The Integration Runtime is the compute infrastructure used by Azure Data Factory to provide data integration capabilities across different network environments.

- Azure IR: Runs in Azure and is used for cloud-to-cloud data movement.

- Self-Hosted IR: Installed on-premises or in a private network to enable secure data transfer between cloud and local systems.

- SSIS IR: Specifically designed to run SQL Server Integration Services (SSIS) packages in the cloud.
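A linked service selects its runtime through a `connectVia` reference. The sketch below shows how an on-premises SQL Server linked service might be routed through a Self-Hosted IR. The linked service name, the runtime name `SelfHostedIR-01`, and the placeholder connection string are all assumptions made for illustration.

```python
def with_integration_runtime(linked_service, ir_name):
    """Return a copy of the linked service that connects via the named IR."""
    properties = dict(linked_service["properties"])  # shallow copy, original untouched
    properties["connectVia"] = {
        "referenceName": ir_name,
        "type": "IntegrationRuntimeReference",
    }
    return {"name": linked_service["name"], "properties": properties}


# Hypothetical on-premises source; the connection string is a placeholder.
on_prem_sql = {
    "name": "OnPremSqlLS",
    "properties": {
        "type": "SqlServer",
        "typeProperties": {"connectionString": "<connection-string>"},
    },
}

routed = with_integration_runtime(on_prem_sql, "SelfHostedIR-01")
print(routed["properties"]["connectVia"])
```

When `connectVia` is left out, the linked service falls back to the default Azure IR, which is usually what you want for cloud-to-cloud movement.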

Azure Data Factory vs. Traditional ETL Tools

Understanding the differences between Azure Data Factory and traditional ETL tools like Informatica, Talend, or SSIS helps highlight its advantages in a modern cloud environment.

Architecture and Scalability

Traditional ETL tools often require dedicated servers and manual scaling. In contrast, Azure Data Factory is serverless and scales automatically based on workload demands.

- No need to provision or manage VMs or servers.

- Auto-scales during high-volume data transfers.

- Pay-as-you-go pricing model reduces cost overhead.

Cloud-Native Integration

Azure Data Factory is built for the cloud and integrates natively with Azure services like Azure Data Lake Storage, Azure SQL Data Warehouse (now Synapse), and Power BI.

- Direct connectivity to Azure services without complex configurations.

- Supports REST APIs and OAuth for SaaS integrations (e.g., Dynamics 365, Google Analytics).

- Enables hybrid scenarios via Self-Hosted Integration Runtime.

Development and Collaboration

ADF offers a visual authoring interface, Data Factory Studio, which you launch from the Azure portal. It also supports Git integration for version control and team collaboration.

- Drag-and-drop pipeline designer simplifies development.

- CI/CD pipelines can be set up using Azure DevOps.

- Team members can work in separate Git branches (for example, in Azure Repos) and merge changes through pull requests.

Powerful Features of Azure Data Factory

Azure Data Factory stands out due to its rich set of features designed to handle complex data workflows efficiently and securely.

Data Flow – Code-Free Data Transformation

Azure Data Factory’s Data Flow feature allows users to perform transformations without writing code. It uses a visual interface to build data transformation logic using Spark under the hood.

- Supports data cleansing, aggregation, joins, and derived columns.

- Runs on auto-scaling Spark clusters managed by Azure.

- Enables debugging with data preview and streaming statistics.

“With Data Flows, even non-developers can build robust transformation pipelines.” — Microsoft Azure Blog

Mapping Data Flows vs. Wrangling Data Flows

There are two types of data flows in ADF: Mapping Data Flows and Wrangling Data Flows (since renamed to the Power Query activity).

- Mapping Data Flows: Used for designing ETL/ELT pipelines with full control over schema and transformations.

- Wrangling Data Flows: Offered Excel-like data preparation under the original name; the same capability is now delivered through the Power Query activity.

- Best practice is to use Mapping Data Flows for production pipelines.

Trigger-Based Pipeline Execution

Azure Data Factory supports multiple types of triggers to automate pipeline execution.

- Schedule Triggers: Run pipelines on a recurring schedule (e.g., daily, hourly).

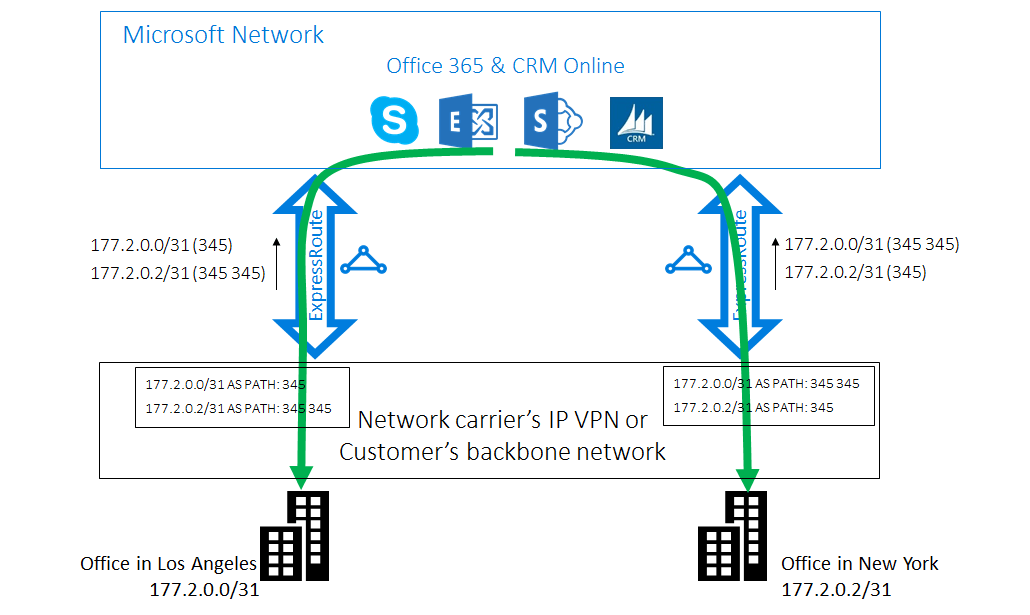

- Event-Based Triggers: Respond to events like file arrival in Blob Storage or Event Grid notifications.

- Tumbling Window Triggers: Ideal for time-based data processing (e.g., processing hourly data windows).
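The difference between a schedule and a tumbling window is easier to see in code. The sketch below first shows the rough JSON shape of an hourly schedule trigger, then uses a small helper to enumerate the fixed-size, contiguous, non-overlapping windows that a tumbling window trigger would work through. The trigger and pipeline names and the start time are hypothetical, and `tumbling_windows` is an illustrative helper, not part of ADF.

```python
from datetime import datetime, timedelta, timezone

# Rough shape of an hourly schedule trigger (hypothetical names and start time).
schedule_trigger = {
    "name": "HourlyTrigger",
    "properties": {
        "type": "ScheduleTrigger",
        "typeProperties": {
            "recurrence": {
                "frequency": "Hour",
                "interval": 1,
                "startTime": "2024-01-01T00:00:00Z",
                "timeZone": "UTC",
            }
        },
        "pipelines": [
            {"pipelineReference": {"referenceName": "DailySalesLoad", "type": "PipelineReference"}}
        ],
    },
}


def tumbling_windows(start, end, size):
    """Yield (window_start, window_end) pairs that tile [start, end) without gaps or overlap."""
    window_start = start
    while window_start + size <= end:
        yield window_start, window_start + size
        window_start += size


start = datetime(2024, 1, 1, tzinfo=timezone.utc)
windows = list(tumbling_windows(start, start + timedelta(hours=3), timedelta(hours=1)))
for window_start, window_end in windows:
    print(window_start.isoformat(), "->", window_end.isoformat())
```

Each window ends exactly where the next one begins, which is what makes tumbling windows a good fit for backfills and for processing time-sliced data exactly once.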

Integration with Other Azure Services

Azure Data Factory doesn’t work in isolation—it thrives when integrated with other Azure services to build end-to-end data solutions.

Azure Databricks Integration

You can invoke Databricks notebooks or JAR files from ADF pipelines to perform advanced analytics and machine learning.

- Pass parameters from ADF to Databricks for dynamic execution.

- Leverage Databricks’ Spark engine for heavy data processing.

- Monitor job status directly from ADF monitoring tools.
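Parameter passing is the part that tends to trip people up, so here is a small sketch of what a Databricks Notebook activity definition might look like. The activity name, linked service name, notebook path, and the `run_date` parameter are all hypothetical. The expression `@pipeline().parameters.runDate` assumes the pipeline declares a `runDate` parameter.

```python
import json


def databricks_notebook_activity(name, linked_service, notebook_path, parameters):
    """Return an activity definition that runs a Databricks notebook."""
    return {
        "name": name,
        "type": "DatabricksNotebook",
        "linkedServiceName": {
            "referenceName": linked_service,
            "type": "LinkedServiceReference",
        },
        "typeProperties": {
            "notebookPath": notebook_path,
            # Values are passed to the notebook as widget parameters.
            "baseParameters": parameters,
        },
    }


activity = databricks_notebook_activity(
    name="ScoreCustomers",                    # hypothetical
    linked_service="DatabricksLS",            # hypothetical
    notebook_path="/Shared/score_customers",  # hypothetical
    parameters={"run_date": "@pipeline().parameters.runDate"},
)
print(json.dumps(activity, indent=2))
```

Inside the notebook, the value would typically be read with a widget lookup such as `dbutils.widgets.get("run_date")`.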

Learn more about integration: Azure Databricks Notebook Activity

Azure Synapse Analytics and SQL Pools

Azure Data Factory integrates tightly with Azure Synapse Analytics (formerly SQL Data Warehouse) to load and transform large datasets.

- Use PolyBase for high-speed data loading into dedicated SQL Pools.

- Orchestrate ELT workflows where transformation happens inside Synapse.

- Run T-SQL in Synapse through the Stored Procedure and Script activities.

Power BI and Data Visualization

After data is processed, ADF can trigger Power BI dataset refreshes to ensure dashboards are up to date.

- Use Azure Functions or Logic Apps in combination with ADF to call Power BI REST API.

- Automate end-to-end reporting pipelines from raw data to insights.

- Ensure data freshness by scheduling refreshes post-data-load.
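Whichever component makes the call (an Azure Function, a Logic App, or a Web activity), the request itself is a single POST to the Power BI REST API. The sketch below only builds that request and does not send it. The workspace ID, dataset ID, and token are placeholders, and acquiring a valid Microsoft Entra access token is assumed to happen elsewhere.

```python
import json
import urllib.request


def build_refresh_request(group_id, dataset_id, access_token):
    """Build (but do not send) a POST request that starts a dataset refresh."""
    url = (
        "https://api.powerbi.com/v1.0/myorg/"
        f"groups/{group_id}/datasets/{dataset_id}/refreshes"
    )
    body = json.dumps({"notifyOption": "NoNotification"}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
    )


# Placeholder identifiers and token, for illustration only.
request = build_refresh_request(
    group_id="00000000-0000-0000-0000-000000000000",
    dataset_id="11111111-1111-1111-1111-111111111111",
    access_token="placeholder-token",
)
print(request.get_method(), request.full_url)
```

Passing the request to `urllib.request.urlopen` would send it. Running this step only after the load activity succeeds keeps dashboards from refreshing against half-loaded data.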

Security and Compliance in Azure Data Factory

Security is paramount when dealing with enterprise data, and Azure Data Factory provides robust mechanisms to ensure data protection and regulatory compliance.

Role-Based Access Control (RBAC)

Azure Data Factory integrates with Microsoft Entra ID (formerly Azure Active Directory) and supports fine-grained access control.

- Assign roles like Data Factory Contributor, Reader, or Owner.

- Use Managed Identities for secure authentication without storing credentials.

- Integrate with Azure Key Vault to securely store connection strings and secrets.
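As a sketch of the Key Vault pattern, the linked service below keeps the non-secret parts of the connection string inline and resolves the password from Key Vault at run time. The linked service names, server, database, user, and secret name are hypothetical. It also assumes a Key Vault linked service named `KeyVaultLS` already exists and that the factory's managed identity can read secrets from that vault.

```python
import json


def key_vault_secret(vault_linked_service, secret_name):
    """Return a reference that ADF resolves from Key Vault when the activity runs."""
    return {
        "type": "AzureKeyVaultSecret",
        "store": {
            "referenceName": vault_linked_service,
            "type": "LinkedServiceReference",
        },
        "secretName": secret_name,
    }


# Hypothetical server, database, and user; no password appears in the definition.
sql_linked_service = {
    "name": "AzureSqlLS",
    "properties": {
        "type": "AzureSqlDatabase",
        "typeProperties": {
            "connectionString": (
                "Server=tcp:example.database.windows.net,1433;"
                "Database=sales;User ID=adf_loader"
            ),
            "password": key_vault_secret("KeyVaultLS", "sql-loader-password"),
        },
    },
}

print(json.dumps(sql_linked_service, indent=2))
```

Because the definition holds only a reference, it can be committed to Git and promoted between environments without exposing the secret.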

Data Encryption and Network Security

All data in transit and at rest is encrypted by default in Azure Data Factory.

- SSL/TLS encryption for data in motion.

- Integration with Azure Private Link to access ADF over private networks.

- Supports Virtual Network (VNet) service endpoints for secure connectivity.

Compliance and Auditing

Azure Data Factory complies with major industry standards such as GDPR, HIPAA, ISO 27001, and SOC.

- Audit logs are available via Azure Monitor and Log Analytics.

- Track pipeline runs, user actions, and access patterns.

- Set up alerts for failed pipelines or unauthorized access attempts.

Monitoring and Troubleshooting Azure Data Factory

Effective monitoring is crucial for maintaining reliable data pipelines. Azure Data Factory provides comprehensive tools for tracking performance, diagnosing issues, and optimizing workflows.

Monitor Pipeline Runs in the Azure Portal

The Monitoring hub in the ADF portal gives real-time visibility into pipeline executions.

- View run history, duration, and status (Succeeded, Failed, In Progress).

- Drill down into individual activity runs to see input/output and error messages.

- Filter by time range, pipeline name, or trigger type.

Using Azure Monitor and Log Analytics

For advanced monitoring, integrate ADF with Azure Monitor and Log Analytics.

- Collect diagnostic logs for pipelines, activities, and triggers.

- Create custom dashboards and KPIs.

- Set up alert rules based on failure rates or latency thresholds.
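The logic behind a failure-rate alert is simple enough to sketch directly. The example below assumes run records have already been exported as plain dictionaries with a `status` field. That record shape, the sample data, and the 20% threshold are illustrative assumptions, not an Azure Monitor API.

```python
def failure_rate(runs):
    """Fraction of completed runs that failed; runs still in progress are ignored."""
    completed = [run for run in runs if run["status"] in ("Succeeded", "Failed")]
    if not completed:
        return 0.0
    failed = sum(1 for run in completed if run["status"] == "Failed")
    return failed / len(completed)


def should_alert(runs, threshold):
    """True when the failure rate exceeds the given threshold (0.0 to 1.0)."""
    return failure_rate(runs) > threshold


# Hypothetical run records for one pipeline.
sample_runs = [
    {"pipeline": "DailySalesLoad", "status": "Succeeded"},
    {"pipeline": "DailySalesLoad", "status": "Failed"},
    {"pipeline": "DailySalesLoad", "status": "Succeeded"},
    {"pipeline": "DailySalesLoad", "status": "Succeeded"},
    {"pipeline": "DailySalesLoad", "status": "InProgress"},
]

rate = failure_rate(sample_runs)
print(f"failure rate: {rate:.0%}, alert: {should_alert(sample_runs, threshold=0.2)}")
```

In practice the same calculation would live in an Azure Monitor alert rule or a Log Analytics query. The point here is only to show what the rule evaluates.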

Explore monitoring setup: Monitor ADF with Azure Monitor

Common Issues and How to Resolve Them

Even well-designed pipelines can encounter issues. Here are common problems and solutions:

- Self-Hosted IR Offline: Check service status, firewall rules, and network connectivity.

- Copy Activity Fails: Validate credentials, check data format, and review network access.

- Slow Performance: Optimize copy settings (e.g., parallel copies, staging), use compression, or scale Integration Runtime.

Best Practices for Using Azure Data Factory

To get the most out of Azure Data Factory, follow these proven best practices for performance, reliability, and maintainability.

Design Modular and Reusable Pipelines

Avoid monolithic pipelines. Instead, break them into smaller, reusable components.

- Use parameters and variables to make pipelines dynamic.

- Leverage the Execute Pipeline activity to chain workflows.

- Create template pipelines for common operations (e.g., data load, archive).
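Here is a rough sketch of that modular pattern: a parent pipeline that takes a `runDate` parameter and chains two reusable child pipelines with Execute Pipeline activities. The pipeline names (`GenericTableLoad`, `GenericArchive`), activity names, and parameters are all hypothetical.

```python
import json


def execute_pipeline_activity(name, child_pipeline, parameters, depends_on=None):
    """Return an Execute Pipeline activity, optionally chained after another activity."""
    activity = {
        "name": name,
        "type": "ExecutePipeline",
        "typeProperties": {
            "pipeline": {"referenceName": child_pipeline, "type": "PipelineReference"},
            "parameters": parameters,
            "waitOnCompletion": True,  # parent waits for the child run to finish
        },
    }
    if depends_on:
        activity["dependsOn"] = [
            {"activity": depends_on, "dependencyConditions": ["Succeeded"]}
        ]
    return activity


parent = {
    "name": "NightlyOrchestrator",
    "properties": {
        "parameters": {"runDate": {"type": "String"}},
        "activities": [
            execute_pipeline_activity(
                "LoadSales",
                "GenericTableLoad",
                {"tableName": "sales", "runDate": "@pipeline().parameters.runDate"},
            ),
            execute_pipeline_activity(
                "ArchiveSales",
                "GenericArchive",
                {"tableName": "sales"},
                depends_on="LoadSales",
            ),
        ],
    },
}

print(json.dumps(parent, indent=2))
```

The child pipelines know nothing about sales specifically. Adding another table means adding another pair of activities with different parameter values, not another copy of the load logic.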

Optimize Data Movement and Transformation

Efficient data movement reduces cost and improves performance.

- Use staging (e.g., Azure Blob Storage) when copying between different regions or formats.

- Enable compression and binary format (e.g., Parquet, ORC) for faster transfers.

- Leverage partitioning in source and sink to parallelize data reads/writes.
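These settings map to a handful of Copy activity properties. The sketch below shows where they would sit for a staged load from Azure SQL Database into a Synapse dedicated SQL pool. The linked service name and staging path are hypothetical, and the numeric values are placeholders for illustration rather than tuning recommendations.

```python
import json


def tuned_copy_properties(staging_linked_service, staging_path,
                          parallel_copies=8, data_integration_units=16):
    """Return Copy activity typeProperties with staging and parallelism configured."""
    return {
        "source": {"type": "AzureSqlSource"},
        "sink": {"type": "SqlDWSink", "allowPolyBase": True},
        "parallelCopies": parallel_copies,               # illustrative value
        "dataIntegrationUnits": data_integration_units,  # illustrative value
        "enableStaging": True,
        "stagingSettings": {
            "linkedServiceName": {
                "referenceName": staging_linked_service,
                "type": "LinkedServiceReference",
            },
            "path": staging_path,
        },
    }


# Hypothetical staging linked service and path.
properties = tuned_copy_properties("StagingBlobLS", "staging/sales")
print(json.dumps(properties, indent=2))
```

More parallelism and more data integration units raise throughput only until the source or sink becomes the bottleneck, and they raise cost as well, so it is worth measuring one change at a time.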

Implement CI/CD for Data Pipelines

Treat data pipelines like code. Use CI/CD to promote changes from dev to production safely.

- Use Git integration in ADF for version control.

- Set up release pipelines in Azure DevOps to deploy across environments.

- Validate configurations and test pipelines before deployment.
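One inexpensive check to run in a build step is a scan of the exported pipeline JSON for structural mistakes. The sketch below flags activities whose `dependsOn` entries name an activity that does not exist in the same pipeline. The helper `find_broken_dependencies` and the sample pipeline are hypothetical, and a check like this would complement ADF's own validation rather than replace it.

```python
def find_broken_dependencies(pipeline):
    """Return (activity, missing_dependency) pairs for unresolved dependsOn entries."""
    activities = pipeline["properties"]["activities"]
    known_names = {activity["name"] for activity in activities}
    problems = []
    for activity in activities:
        for dependency in activity.get("dependsOn", []):
            if dependency["activity"] not in known_names:
                problems.append((activity["name"], dependency["activity"]))
    return problems


# Hypothetical pipeline with one dangling dependency ("LoadSalez" is a typo).
sample_pipeline = {
    "name": "NightlyOrchestrator",
    "properties": {
        "activities": [
            {"name": "LoadSales", "type": "Copy"},
            {
                "name": "ArchiveSales",
                "type": "Copy",
                "dependsOn": [
                    {"activity": "LoadSalez", "dependencyConditions": ["Succeeded"]}
                ],
            },
        ]
    },
}

for activity_name, missing in find_broken_dependencies(sample_pipeline):
    print(f"{activity_name} depends on unknown activity {missing}")
```

Failing the build when the returned list is non-empty catches this class of error before a deployment reaches a shared environment.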

Real-World Use Cases of Azure Data Factory

Azure Data Factory is used across industries to solve real business challenges. Here are some practical examples.

Healthcare: Patient Data Integration

Hospitals use ADF to consolidate patient records from EMR systems, labs, and wearable devices into a centralized data lake for analytics.

- Integrate HL7 and FHIR data formats.

- Ensure HIPAA compliance with encryption and access controls.

- Enable real-time dashboards for patient monitoring.

Retail: Sales and Inventory Analytics

Retailers use ADF to combine point-of-sale data, e-commerce platforms, and supply chain systems.

- Process daily sales data from multiple stores.

- Sync inventory levels across warehouses and online platforms.

- Feed data into Power BI for executive reporting.

Finance: Regulatory Reporting

Banks use ADF to automate the collection and transformation of financial data for regulatory compliance (e.g., Basel III, MiFID II).

- Extract data from core banking systems and data warehouses.

- Apply business rules and validations using Data Flows.

- Generate reports and submit them on schedule using triggers.

Getting Started with Azure Data Factory

Ready to start using Azure Data Factory? Here’s how to begin building your first pipeline.

Step-by-Step: Create Your First Pipeline

Follow these steps to create a simple data copy pipeline:

- Log in to the Azure portal and create a new Data Factory resource.

- Open the ADF authoring interface (Data Factory Studio).

- Create linked services for your source (e.g., Azure Blob Storage) and sink (e.g., Azure SQL Database).

- Define datasets for the source and destination files/tables.

- Add a Copy Activity to the pipeline and configure source and sink.

- Debug the pipeline, then publish and trigger a run.

Learning Resources and Documentation

Microsoft provides extensive documentation and tutorials to help you master ADF.

- Official Azure Data Factory Documentation

- Video Tutorials on Microsoft Learn

- Hands-on labs available on Microsoft Learn.

Community and Support

Join the Azure community to get help and share knowledge.

- Microsoft Q&A: Ask questions about ADF

- Stack Overflow: Use the azure-data-factory tag.

- GitHub samples: Explore open-source ADF templates and scripts.

What is Azure Data Factory used for?

Azure Data Factory is used to create, schedule, and manage data integration workflows that move and transform data from various sources to destinations. It’s ideal for ETL/ELT processes, data migration, and building data pipelines for analytics.

Is Azure Data Factory serverless?

Yes, Azure Data Factory is a serverless service. You don’t need to manage infrastructure—compute resources are automatically provisioned when running pipelines.

How much does Azure Data Factory cost?

Azure Data Factory uses a pay-as-you-go model. Costs depend on pipeline activity runs, data movement, and Data Flow execution. There’s a free tier with limited monthly activity runs.

Can Azure Data Factory replace SSIS?

Yes, Azure Data Factory can replace SSIS, especially with the SSIS Integration Runtime, which allows you to lift and shift existing SSIS packages to the cloud.

How do I monitor pipelines in Azure Data Factory?

You can monitor pipelines using the Monitoring tab in the ADF portal, Azure Monitor, Log Analytics, or by setting up alerts for failures and performance metrics.

Azure Data Factory is a powerful, flexible, and secure platform for modern data integration. From simple data movement to complex transformation workflows, it empowers organizations to build scalable, cloud-native data pipelines. By leveraging its rich features, seamless Azure integration, and robust monitoring, businesses can unlock the full value of their data. Whether you’re migrating from on-premises ETL tools or building new analytics solutions, Azure Data Factory provides the foundation for data-driven success.
